“The first rule of data science is: don’t ask how to define data science.” So says Josh Bloom, a UC Berkeley professor of astronomy and a lead principal investigator (PI) at the Berkeley Institute for Data Science (BIDS). If this approach seems problematic, that’s because it is—data science is more of an emerging interdisciplinary philosophy, a wide-ranging modus operandi that entails a cultural shift in the academic community. The term means something different to every data scientist, and in a time when all researchers create, contribute to, and share information that describes how we live and interact with our surroundings in unprecedented detail, all researchers are data scientists.

We live in a digitized world in which massive amounts of data are harvested daily to inform actions and policies for the future. We build sophisticated systems to collect, organize, analyze, and share data. We each have unlimited access to huge amounts of information and the tools to interpret it. We are more aware than ever how molecules and cells move, how inflation fluctuates, and how the flu travels, all in real time. We can efficiently distribute bus stations and plan transit schedules. With the right tools, we can predict how proteins misfold in our brains,

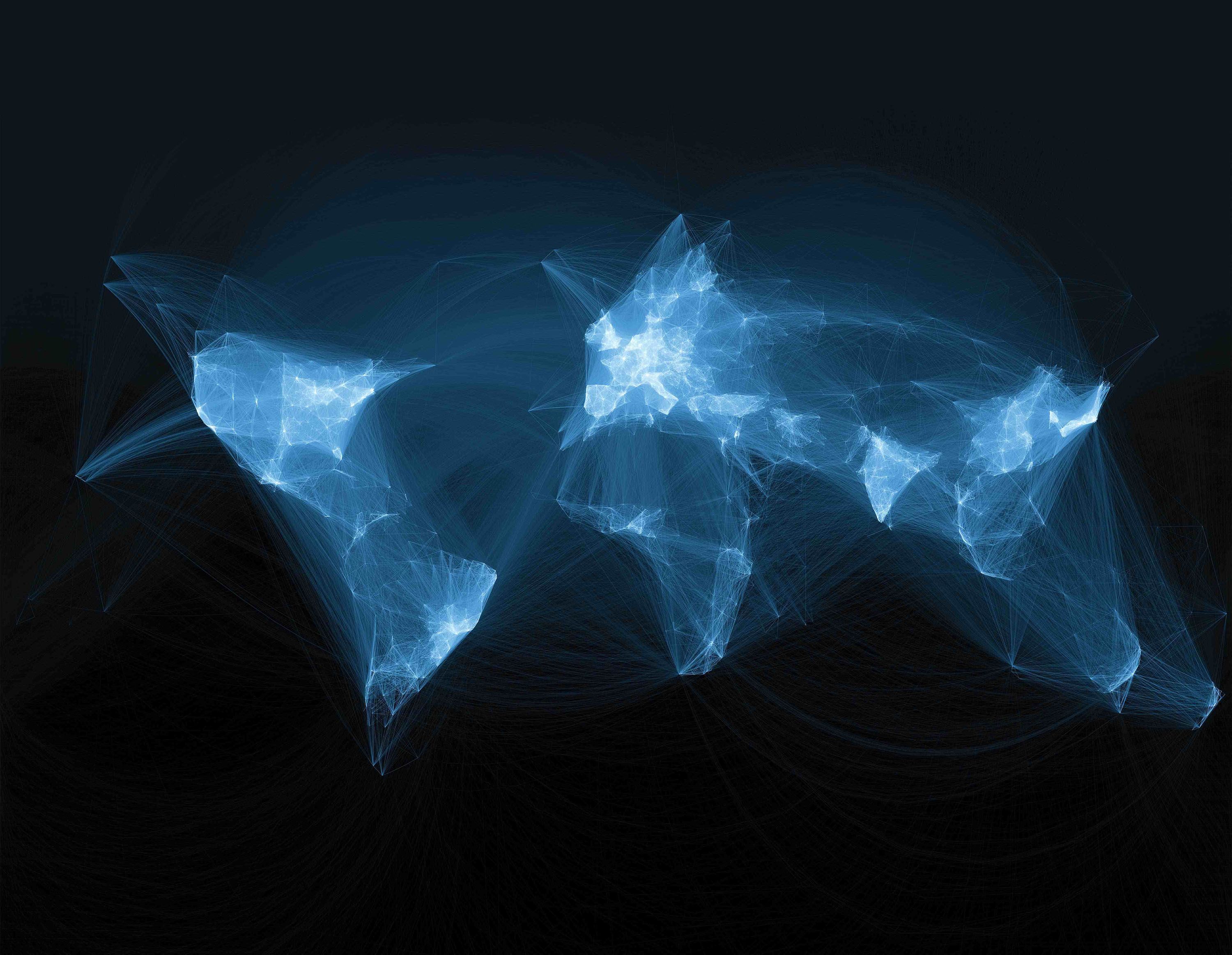

Scientific Collaborations at UC Berkeley. click here for an interactive version. credit: Natalia Bilenko

Scientific Collaborations at UC Berkeley. click here for an interactive version. credit: Natalia Bilenko

or what our galaxy might look like in a thousand years. In a society driven by data, knowledge is a commodity that is created and shared transparently all over the world. It connects causes with effects, familiar places with distant locales, the past with the future, people with one another.

In this rapidly changing world, universities are faced with the challenge of adapting to increasingly data-driven research agendas. At UC Berkeley and elsewhere, scientists and administrators are working together to reshape how we do research and ultimately restructure the culture of academia.

So what is data science?

More than ever, researchers in all disciplines find themselves wading through more and more kinds of data. Frequently, there is no standard system for storing, organizing, or analyzing this data. Data often never leaves the lab; the students graduate, the computers are upgraded, and records are simply lost. This makes research in the social, physical, and life sciences difficult to reproduce and develop further. To make matters worse, it’s no easy task to build tools for general scientific computing and data analysis. Doing so requires a set of skills researchers must largely learn independently, and a timeframe that extends beyond the length of the average PhD.

Historically, no single practice described the simultaneous use of so many different skill sets and bases of knowledge. However, in recent years data science has emerged as the field that exists at the intersection of math and statistics knowledge, expertise in a science discipline, and so-called “hacking skills,” or computer programming ability. While these skills are changing the way that science is practiced, they’re also changing other aspects of society, such as business and technology startups. In a world where rapidly advancing technology is forcibly changing data science practices, universities are struggling to keep up, often losing good researchers to industries that place a high value on their computational skills.

Design: Natalia Bilenko, modified from Drew Conway; Book: MTchemik; network: Qwertyus


Despite its increasing importance and relevance, it’s almost impossible to pin down what data science actually is. Data scientists hate doing it. Bloom describes data science as a context-dependent way of thinking about and working around data—a set of skills derived from statistics, computer science, and physical and social sciences. Cathryn Carson, the associate dean of Social Science who is heavily involved in BIDS and the new Social Science Data Laboratory (D-Lab), is more interested in how we can use the idea of data science to do more interesting science. This involves bringing people from different areas of expertise together to work on multifaceted problems. “It’s a kind of social engineering,” Carson says. “I don’t even know the ontology to describe it with. It’s not a discipline; it’s not a branch of science; it’s a platform for building a coalition. It's one of these interdisciplinary, non-disciplinary spaces where people get stuff done in interesting ways, but don't even know what to call it themselves.” Those involved in practicing data science may be researchers studying a specific topic of interest (domain scientists), or researchers who build powerful computational tools (methodologists).

What do researchers think? Dav Clark, a data scientist at D-Lab and psychologist by training, says that “data science is a meaningless term that means different things to different people.” His colleague Nick Adams, a senior graduate student in sociology, describes data science as “a social construction, a convenient arena for the mixing of domain scientists and people who know what to do with the computers.” The D-Lab, a bright green space on the third floor of Barrows Hall, is a resource for those navigating data-intensive social science who want to pick up some new skills, crunch numbers together, or plan their research with friends. It is funded by the Office of the Vice Chancellor for Research and is a multi-disciplinary, multi-organizational effort.

For Clark and Adams, data science is about building bridges between different disciplines to achieve a more complete understanding of the universe. It’s about providing computational training to researchers and building computational tools that can be useful for broad audiences. Clark notes that one important part of practicing data science is the development of a standard set of practices across campus and research communities, which could improve data accessibility and enable transparent, reproducible research. “The actual term ‘data science’ is one of those terms that mean everything and nothing at the same time,” says Adams.

At the Simons Institute for the Theory of Computing, data science is something more technical. Located in the newly remodeled Calvin Hall, the institute is taking shape as a place for scholars from around the world to tackle fundamental mathematical and computational problems in the form of semester-long programs, funded by a $60 million grant from the Simons Foundation (see “The Future of Computing”). According to Bloom, the researchers at the Simons Institute investigate purely academic computer science questions by bringing together the world’s experts in theoretical computer science and other fields to explore unsolved problems. There are two programs planned for the next six months: evolutionary biology and the theory of computing, and quantum Hamiltonian complexity. While very different scientifically, both of these fields require highly sophisticated theoretical and computational modeling, which is the Simons Institute’s specialty.

Anand Bhaskar, a research fellow at the Simons Institute who is working on the evolutionary biology problem, says, “‘Data science’ and ‘big data’ are popular terms for the application of statistics and computer science to analyzing the large amounts of data and learning about the underlying processes generating the data that we’re seeing today in various domains, such as e-commerce, biological sequencing studies, sensor networks, et cetera.” He gives an example from biology: with advances in DNA sequencing technology, now-accessible full genome sequences hold the keys to a better understanding of evolutionary processes and genetic variations that give rise to diseases. However, handling and analyzing the resulting exponentially larger data sets presents new technical challenges. Such computational problems could entail weeks of computing time per calculation if traditional methods were simply scaled up. Realistically, handling large data sets requires the development of scalable tools, techniques, and algorithms to effectively use computer processing and memory. The Simons Institute is a place for non-traditional interactions to occur—between methodologists and domain scientists, mainstream theoretical computer scientists and biologists. These researchers, who are working on both sides of the problem, can collaborate and introduce their respective ideas and research tools to one another.

Data science at UC Berkeley

Data science on UC Berkeley's campus. Credit: Natalia Bilenko

A dedicated and diverse community of scientists, administrators, and funding institutions at UC Berkeley is reshaping the culture of research by establishing best practices in data science and testing the limits of computation. Several organizations apart from the D-Lab and the Simons Institute have a hand in data science on campus, and while data science may have recently emerged as a hot field, many of these data-focused organizations are not new. The Data Lab in the Doe Library has been available to help students locate and analyze data since October 2008. The Department of Statistics has long hosted consulting-style office hours for researchers in other fields. In addition to these and other university-wide initiatives, many departments have their own workshops and seminar series to arm researchers with field-specific skills to attack data-heavy research questions.

Now in its inaugural year, BIDS is a multifaceted, collaborative effort by professors and scientists from diverse disciplines across campus, the UC Berkeley library, the Gordon and Betty Moore Foundation, and the Alfred P. Sloan Foundation. BIDS leaders hope to extend their ambitious agenda beyond the five years of funding they have received. They are establishing a presence in the Doe Library, which is both a strategic and symbolic move—the library is physically in the middle of campus, and as the central library, it is by definition an interdisciplinary space.

Other groups that practice data science on campus focus on specific fields and problems. The AMPLab (short for “Algorithms, Machines, and People”) was established in 2011 specifically to deal with big data problems. AMPLab is a government- and industry-funded collaborative research team that focuses on creating powerful analytics tools combining machine learning, cloud computing, and crowdsourcing. Its faculty and students mostly belong to the Department of Electrical Engineering and Computer Science. In the social sciences, the Social Science Matrix (SSM) is another new center dedicated to fostering creative, interdisciplinary research at Berkeley. It funds over 60 research projects, working groups, and research centers around campus, many of which are interdepartmental collaborations. Additionally, the School of Information recently rolled out a new Master of Information and Data Science (MIDS) program. Its curriculum is designed to prepare students to solve real-world problems involving complex data.

The evolving role of data in science

No matter how you define it or where you do it, data science is pervasive, multifaceted, and radically changing how we do good research. In the social sciences, modern research problems demand analysis beyond traditional statistical hypothesis testing. Students are increasingly faced with the prospect of building their own analysis software and methodologies. In the life sciences, vast quantities of data generated by new DNA, RNA, and protein sequencing technologies have engulfed biologists and chemists, who rarely have training in statistics or computer science. And physicists, who traditionally have the most computational training, are tackling data sets orders of magnitude larger than the previous generation of researchers ever dealt with. As Bloom says, “big data is when you have more data than you’re used to.”

Take, for example, Adams’s research project. To understand interactions between police and protesters during Occupy movements, he used event data collected from newspapers in 190 US cities. According to Adams, a study on this topic by a lone researcher would traditionally be limited to four to eight cities, with the researcher’s brain serving as the main computational tool. Moreover, this approach would introduce the researcher’s own biases into the study, and its limited nature would lend the findings little overall impact. Worse, natural language parsing technology is unreliable in extracting contextual meaning from digitized texts, so all of the data would have to be collected manually. To this end, Adams has trained a team of undergraduates to pore over published articles and record details about Occupy-related events.

However, the team realized that the project required massive outsourcing to complete. “Amassing a rich database capable of explaining the factors affecting protest/police interactions will require making hundreds of thousands of discrete judgments about what the text in newspaper articles means,” says Adams. To collect all the data, Adams is using internet crowdsourcing to query hundreds of thousands of strangers and crosschecking these large-scale results with those the team has collected manually. In the future, it’s possible that a similar study could use Adams’s data as a training set for an algorithm to make predictions about protest movements. Adams notes that the same experimental design could be applied across the social sciences.

An increased volume of data, compounded with inherent heterogeneity, is also a problem in evolutionary biology. Biologists have more data to conduct detailed studies in genetics, protein structure and function, and bioinformatics, but they often lack the necessary computational skills. Kimmen Sjölander is a professor of bioengineering and a lead PI at BIDS, and her lab uses enormous datasets to understand the fundamental principles underlying the evolution of species and gene families. Some of the algorithms that the Sjölander lab and others use have arisen within their respective areas of study. Others, such as hidden Markov models, have been adapted from other fields. This method uses probability theory to infer a sequence of hidden states from a sequence of observed data, and was popularized in speech recognition. The Sjölander lab has adapted it to detect protein sequence homologies, which is useful in modeling protein structures and interactions that are important for understanding diseases and designing drugs.
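To make the idea of a hidden Markov model concrete, here is a minimal sketch of the standard Viterbi algorithm, which finds the most likely sequence of hidden states behind a sequence of observations. It is not the Sjölander lab's software. The two states ("conserved" and "variable"), the two observation symbols ("H" for hydrophobic, "P" for polar), and every probability below are invented for illustration only.

```python
import math

def viterbi(observations, states, start_p, trans_p, emit_p):
    """Return (log-probability, path) of the most likely hidden-state sequence."""
    # best[t][s]: log-probability of the best path that ends in state s at step t
    best = [{s: math.log(start_p[s]) + math.log(emit_p[s][observations[0]])
             for s in states}]
    back = [{}]  # back[t][s]: predecessor of s on that best path
    for t in range(1, len(observations)):
        best.append({})
        back.append({})
        for s in states:
            # Pick the previous state that maximizes the score of reaching s.
            prev = max(states,
                       key=lambda p: best[t - 1][p] + math.log(trans_p[p][s]))
            best[t][s] = (best[t - 1][prev] + math.log(trans_p[prev][s])
                          + math.log(emit_p[s][observations[t]]))
            back[t][s] = prev
    # Trace back from the best final state to recover the full path.
    last = max(states, key=lambda s: best[-1][s])
    path = [last]
    for t in range(len(observations) - 1, 0, -1):
        path.append(back[t][path[-1]])
    path.reverse()
    return best[-1][last], path

# Hypothetical toy model: all names and numbers are assumptions.
STATES = ("conserved", "variable")
START_P = {"conserved": 0.5, "variable": 0.5}
TRANS_P = {"conserved": {"conserved": 0.9, "variable": 0.1},
           "variable": {"conserved": 0.1, "variable": 0.9}}
EMIT_P = {"conserved": {"H": 0.9, "P": 0.1},
          "variable": {"H": 0.3, "P": 0.7}}
OBSERVED = "HHHHPPPP"

log_prob, path = viterbi(OBSERVED, STATES, START_P, TRANS_P, EMIT_P)
print(path)
```

In this toy run the model labels the first four positions "conserved" and the last four "variable": it tolerates one costly state switch because that explains the observed symbols far better than staying in either state throughout. Profile models used for real protein families are much larger, but they rest on the same kind of calculation.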

The large datasets procured and made public by thousands of research groups, as well as software and algorithms developed by the Sjölander lab and others, are increasingly necessary in biology and biochemistry research despite being incomplete. As an example, the heavily-used protein data bank UniProt receives millions of dollars annually in government funding and input from thousands of researchers around the world, but that isn’t enough to prevent frequent errors in sequence annotation. Biologists around the world rely heavily on the sequences in protein data banks, and an incorrect sequence could put someone back months or even years in his or her experiments. “In the social sciences and physical sciences, it’s more likely that a PhD student or a postdoc can write a script to analyze a problem and it will be sufficient,” Sjölander says. “In the life sciences, we have new, constantly changing datasets with noisier data that is more heterogeneous.”

design: Natalia Bilenko; database: linecons; Sherlock: Rumensz; tower: Mungewell;


However, the rewards of taking a computational approach to solve a problem in biology can be substantial. For instance, Bhaskar has developed an algorithm that uses genome sequence data from a large number of people to estimate historical population sizes, as a step towards learning about the huge demographic changes that have shaped modern human genetics. While it might be intuitively obvious that there is more genetic diversity in large populations than in small ones, Bhaskar and his colleagues formalized that idea in a statistical model that describes how genome sequences have evolved. “Projects like these regularly involve tools from a number of areas such as applied probability, stochastic processes, linear algebra, combinatorics, and machine learning, among others. In fact, several of the central statistical models used in modern evolutionary biology were developed by eminent probabilists who were approaching this from a purely theoretical perspective,” says Bhaskar.
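The link between genetic diversity and population size can be illustrated with a classical textbook calculation, Watterson's estimator. This is not Bhaskar's algorithm, which handles population sizes that change over time. The sketch below assumes a constant-size diploid population, and the sample sequences and function names are made up for illustration. Under those assumptions, diversity per site is estimated as θ = S / (a_n · L), where S is the number of variable sites among n sequences of length L and a_n = 1 + 1/2 + ... + 1/(n-1); the effective population size then follows from θ = 4·N·μ, with μ the per-site mutation rate per generation.

```python
def count_segregating_sites(sequences):
    """Count alignment columns where the sequences do not all agree."""
    return sum(1 for column in zip(*sequences) if len(set(column)) > 1)

def watterson_theta(num_segregating_sites, num_sequences):
    """Watterson's estimator of theta for the whole region (not per site)."""
    a_n = sum(1.0 / i for i in range(1, num_sequences))
    return num_segregating_sites / a_n

def effective_population_size(theta_per_site, mutation_rate_per_site):
    """Solve theta = 4 * N * mu for N (diploid, constant-size assumption)."""
    return theta_per_site / (4.0 * mutation_rate_per_site)

# Hypothetical aligned sample: four sequences, eight sites each.
SAMPLE = ["ACGTACGT",
          "ACGTACGA",
          "ACCTACGT",
          "ACGTACGT"]

num_sites = count_segregating_sites(SAMPLE)
theta = watterson_theta(num_sites, len(SAMPLE))
theta_per_site = theta / len(SAMPLE[0])
print(num_sites, theta, theta_per_site)
```

More variable sites in the sample give a larger θ and therefore a larger estimated population, which is the intuition described above. A sample this small says nothing about real populations; it only shows the arithmetic.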

Finding the right tools for the job

With researchers in every field working on their own computational methods to address problems that are both field-specific and interdisciplinary, the academic landscape of data science is mushrooming with opportunities and obstacles. A major concern for researchers, and a stated goal of D-Lab and BIDS, is building computational tools that are readily available and easy to use, enabling well-designed and reproducible scientific studies.

Fernando Perez promotes this view. Perez is a lead PI at BIDS and the founder of IPython, an interactive computing environment used by researchers around the world. His role at BIDS is twofold: developing computational tools and platforms, and enabling open and reproducible science. Though Perez’s formal post is in the Brain Imaging Center, his passion is open source computational tool development. Open source software is accessible for anyone to use under a free license, and many open source platforms encourage the input of the community, allowing for further improvements. The open source model surfaced in many technological communities in response to proprietary software owned by corporations, as a way to combat prohibitive expenses and to build a community-based platform for software development. Over the last decade, Perez has accumulated experience in developing numerical tools, data visualization tools, and statistical tools in addition to further developing IPython.

Fernando Perez, lead PI at BIDS and creator of IPython, demonstrates brain imaging analyses performed using the IPython Notebook, an interactive web-based computational environment.


“IPython was my PhD procrastination project. I said it would be just an afternoon hack, and that afternoon hack twelve years later is now a large effort,” says Perez. “There are multiple people who work full-time on it. It has been far—by many, many, many orders of magnitude—more impactful than my dissertation.”

The tools that Perez and the IPython team create are varied: some are generic machine learning programs that are useful across disciplines, while others are specific to particular types of data, such as brain imaging. They are all high quality, powerful, and widely used, but it took over a decade for like-minded scientists to develop them. The scientists are, for the most part, graduate students and postdoctoral researchers who take an interest in building computational tools as well as contributing to a now well-established community. Perez says, “I’ve been very active in the space of creating both the technical tools and the community of scientists who develop these tools in an open, collaborative model. It has been a very interesting journey because I came from a space where we tend to use mostly proprietary software for doing research, and I was told by my seniors at the time that I should just get on with my life and stop creating these tools and instead I should just write more papers.” He explains that he ignored the advice because of the clear value of open source tool development, and instead devoted more and more time to it. Perez says that “even at some costs to my career, I still think it was the right thing to do.”

Clark and researchers at the D-Lab do their own share of tool development, congregating in a group known as the Python Workers Party (PWP) with members of the IPython team and other graduate students and research staff. Clark has a personal interest in through-the-web access for data science software and about ten ongoing side projects at any time. The PWP has a relaxed, study hall feel, and everyone works on their own projects at their own pace.

The Sjölander lab has also built open-source software as well as a protein family database, which is widely used by researchers around the world. Sjölander firmly advocates for better databases and infrastructure, as well as a cultural shift within academia.

“Many people think that if you have open source software and shared data sets, it will solve everything. That’s not true. It’s part of the solution, not the whole solution,” says Sjölander. “The problems in biology are so huge that individual investigators can’t solve the challenges in their own labs; we need a significant change to the infrastructure for biological research in this data science era.”

Changing the academic landscape

Sjölander is not alone in the belief that cultural changes are necessary to achieve meaningful advances in data science, and vice versa. Slowly, a paradigm shift may be occurring. There is a growing public interest in reproducible research and open science, which is mirrored by vocal faculty and students at universities and research institutions. This is perhaps best demonstrated by recent support for open access journals. The Moore and Sloan Foundations, which together provided $37.8 million in funding to UC Berkeley, New York University, and the University of Washington, believe in the new model. So does the administration at UC Berkeley, which is providing the funding to transform space in the library for BIDS.

Design: Natalia Bilenko; company logos: Linkedin Corporation, GlaxoSmithKline, Microsoft Corporation, Facebook Inc, Apple Inc


“This purely technical work has had ramifications in the culture of science and the culture of communication of science and that’s part of the broader picture for us at BIDS,” says Perez. “BIDS is not only about developing algorithms and tools and answering specific questions, it’s also about taking the bigger view of how is science changing today in all of these different ways.”

This broader picture has potentially transformative consequences. To support data scientists who develop broadly useful tools and to enable consistent, reproducible, open science, publication policies for engaging with society have to change. To reward scientists doing more collaborative data science, successful collaboration should be valued as an outcome independent of their publication records. Education needs to change to reflect a growing emphasis on building and using computational tools across fields to support the next generation of researchers.

BIDS has made such deep-seated institutional changes a priority. The Moore and Sloan foundations, joining forces with the principal investigators at the three schools, have identified six areas that need attention and work. According to Carson, these are: academic career paths and reward structures; software tools and environments; training; culture and working space; open, reproducible, collaborative science; and ethnography and evaluation (an attempt to understand the character of data science communities). For each of these areas, the group will define “alternative metrics” to determine progress toward their goals. For example, success in training might be measured by the number of students who attend Python workshops, or publications resulting from these and other trainings. Other metrics might be more difficult to define.

Already, departments as well as institutions such as BIDS and D-Lab are devoting resources to further the computational skills of graduate students and researchers. BIDS, D-Lab, and the Simons Institute plan courses, seminar series, and workshops to help train researchers to effectively communicate with each other as well as build, edit, and use programs. Their efforts are complemented by a popular three-day boot camp and semester-long Python course run by Bloom and the intensive courses and office hours run by Clark. Sjölander supports the integration of statistics, algorithms, data structures, and machine learning into traditional biology and chemistry undergraduate curricula to cope with modern research practices. However, life sciences curricula are already packed with classes. “Biology students are trained to memorize tons of stuff,” says Sjölander.

Python Workers Party, a weekly workshop for Python programmers, meets on Friday afternoons at D-Lab. Here, the coders gather around Paul Ivanov, IPython developer at the Brain Imaging Center. credit: Angela Kaczmarczyk


“[Students] will need to use these tools effectively…while being cognizant that they still have to have their knowledge in their own domain and discipline, which is time consuming and a huge demand on their time,” Perez notes, explaining that “the day still has 24 hours and the week still has seven days, so how do we add this component to the training and education of the incoming generations so that they can be effective in doing good science under the constraints of reality?” While better software, financial and intellectual support, and better training will help, Perez concludes, “people have one brain and one pair of eyes and that isn’t changing just because we have a lot of data. That is the bigger picture. That’s what makes it an interesting and unique project.”

A brave new world of data science

Ultimately, for data science to be successful at UC Berkeley, researchers must collaborate to get results. Carson, Perez, Sjölander and Bloom recognize the importance of achieving a dialogue between people who understand the nature of the scientific problem and the computationally savvy developers who are designing tools to analyze data. Often, collaborations fail because of a divergence of incentives for those working on the two sides of the project. Perez says, “both ends of that conversation often end up unhappy. The methodologists feel that they are just being treated like a tool, and the domain people think that the methodologists don’t really understand their problem.” One of the challenges in data science is improving the dialogue on both sides to achieve a valuable outcome: meaningful and efficient science.

“We recognize inefficiencies in the academic setting—there are a lot of reinventions of the wheel. As a scientist in a specific domain, you might not even know that there are tools out there to solve a problem,” says Bloom. He notes that an hour-long meeting with an expert in the field may save you two years of trouble by way of collaboration.

But for a successful collaborative model, the changes required may be radical. “You have to change how tenure is granted and how hiring committees work,” says Sjölander. “There are actually huge structural and sociological changes required before we get the full advantages of open and collaborative science.”

Bloom agrees, saying, “we need to find pathways for people who are contributing to science but not necessarily writing papers. How do we compare someone who wrote 100 papers with someone who has written 100,000 lines of code? How can we promote these people? The goal is having an ecosystem of people who know how to interact with each other, building up the organizational know-how to enable great results, and exporting what we find to other universities.”

How does one measure progress when the goal is not only writing many good scientific papers, but forcibly updating deeply entrenched ivory tower practices? In a scientific culture that often values novelty over utility and individual glory over fruitful collaborations, academics and university administrators must make significant changes to ensure that their institutions remain relevant in a data-driven world. Data scientists have a few ideas, but it remains to be seen how their plans play out. As Sjölander says, “BIDS is an idea, a philosophy. Our success will be measured by the impact we have on Berkeley culture. We need a revolution!”

featured image: Map of scientific collaborations from 2005 through 2009, based on Elsevier’s Scopus database. credit: Olivier H. Beauchesne

This article is part of the Spring 2014 issue.