
Environmental justice is rooted in science

How air quality modeling informs equitable environmental policy

“We don’t all breathe the same air.” That’s how Dr. Yuzhou Wang began to describe the driving force behind her research, as we talked at an outdoor café on the UC Berkeley campus last January. It was unseasonably dry—but thankfully our sky was free of any haze—and under the northern California sun Dr. Wang passionately explained why she has chosen to work at the intersection of air quality modeling and public policy. She wants to make sure that no one—no matter who or where they are—is harmed by the air they breathe.

To ensure that the air is safe, we need a reliable way to know what’s in the air and a systematic method to determine what qualifies as unsafe. In the United States, whether the air in a region is officially considered clean enough to breathe is determined by the National Ambient Air Quality Standards (NAAQS). These standards have been set by the Environmental Protection Agency (EPA) since the passage of the Clean Air Act in 1970, and they specify maximum acceptable concentrations for six major airborne pollutants. Among the six are lead and carbon monoxide, pollutants that can have significant effects on health and are commonly produced as byproducts of manufacturing or the combustion of fossil fuels. A network of federal monitoring sites tracks the concentrations of these pollutants, and if the concentration at a site, typically averaged over three years, is found to exceed the standard, the state and local governments responsible for that area must design and implement a plan to lower emissions.
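
As a rough illustration of that three-year rule, the sketch below averages a monitor’s three most recent annual means, a quantity the EPA calls a design value, and compares it with the 9 µg/m³ annual PM2.5 standard. The readings, the function names, and the rounding to one decimal place are assumptions made for illustration; the EPA’s actual data-completeness and rounding rules are more detailed and differ from pollutant to pollutant.

```python
from statistics import mean

# Hypothetical annual-mean PM2.5 readings (µg/m³) at a single monitor.
# These numbers are invented for illustration, not real measurements.
annual_means = {2021: 9.8, 2022: 8.7, 2023: 9.4}

# Annual PM2.5 standard adopted in February 2024, in µg/m³.
PM25_ANNUAL_STANDARD = 9.0


def design_value(readings):
    """Average the three most recent annual means (simplified: round to 0.1)."""
    latest_years = sorted(readings)[-3:]
    return round(mean(readings[year] for year in latest_years), 1)


def exceeds_standard(readings, standard=PM25_ANNUAL_STANDARD):
    """True if the three-year average is above the standard."""
    return design_value(readings) > standard


dv = design_value(annual_means)
print(f"design value: {dv} µg/m³, exceeds standard: {exceeds_standard(annual_means)}")
```

With these invented numbers, 2022 on its own is below 9 µg/m³, but one cleaner year is not enough: the three-year average of 9.3 µg/m³ still exceeds the standard.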

This system has been effective in lowering air pollution levels in the United States over the past 55 years, but it has its limitations. Consider the class of pollutants monitored by the EPA called PM2.5. That stands for “particulate matter” less than 2.5 micrometers in diameter, around five to ten times smaller than a typical cell in your body. Particles this small can be particularly damaging to your heart and lungs, so PM2.5 has been the subject of a lot of recent research. In 1971, the EPA’s annual standard for airborne particulate matter, which then covered all suspended particles rather than PM2.5 specifically, was 75 micrograms per cubic meter (µg/m³). A dedicated PM2.5 standard followed in 1997 and has been tightened since then, most recently in February 2024 to 9 µg/m³. The dramatic change over the decades reflects both that we have learned more about the dangerous health effects of fine particles and that stricter standards have become feasible due to regulations in manufacturing, transportation, and energy production. However, the fact that the standard had to be lowered again only last year suggests that we have not yet accomplished the goal of clean air for all. Indeed, 9 µg/m³ is still higher than the 5 µg/m³ guideline set by the World Health Organization, and it has been estimated that PM2.5 caused between 47,000 and 460,000 premature deaths in the United States in 2019 alone. Moreover, the adverse health effects of poor air quality in the United States are felt much more strongly by some populations than others.

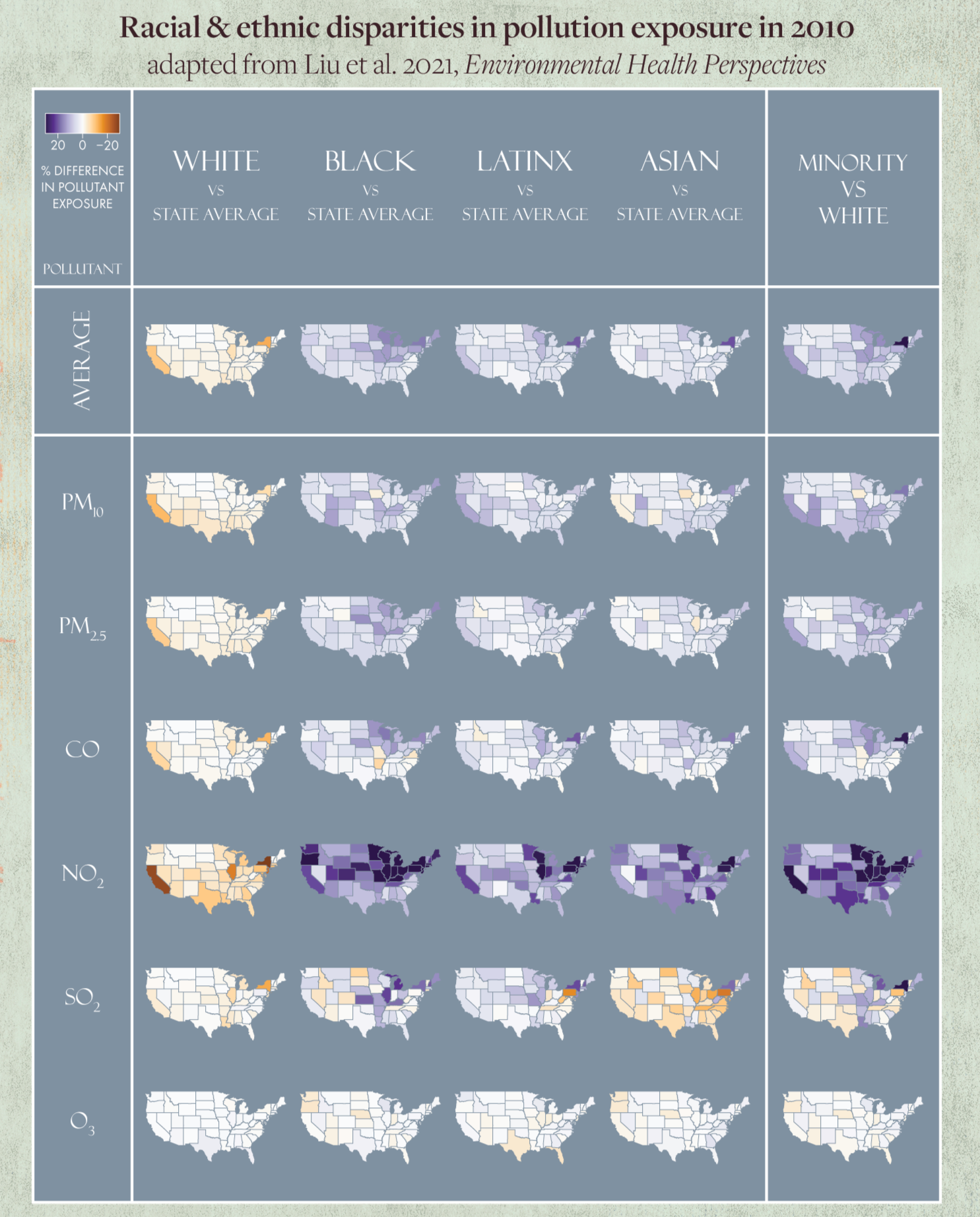

Average exposure levels to many pollutants, including PM2.5, have been measured to be higher in low-income populations and communities of color. Even when controlling for socioeconomic factors, race and ethnicity are still correlated with exposure to pollutants, a pattern widely attributed to historically racist housing practices. For example, housing developers in the first half of the 1900s formed agreements to prohibit the sale of homes to certain racial and ethnic groups, and bankers set conditions on home loans within certain neighborhoods based on race and ethnicity. Over time, more polluting infrastructure was built in neighborhoods with large low-income and non-White populations because political influence was often concentrated in wealthy, White communities. While absolute levels of air pollution have steadily dropped in the past decades, the relative disparities based on race and income persist.
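
Disparities like these are often summarized as a population-weighted average exposure for each group. The sketch below uses three invented neighborhoods and two hypothetical groups, “group_a” and “group_b,” to show how the absolute gap in exposure can shrink when every neighborhood gets cleaner while the relative disparity stays exactly the same. The data and names are assumptions for illustration only.

```python
# Hypothetical neighborhoods: annual PM2.5 (µg/m³) and residents of two groups.
neighborhoods = [
    {"pm25": 6.0, "group_a": 900, "group_b": 100},
    {"pm25": 8.0, "group_a": 500, "group_b": 500},
    {"pm25": 11.0, "group_a": 100, "group_b": 900},
]


def weighted_exposure(areas, group):
    """Average PM2.5 experienced by members of `group`, weighted by residents."""
    residents = sum(area[group] for area in areas)
    return sum(area["pm25"] * area[group] for area in areas) / residents


def disparity(areas):
    """Return (absolute gap, ratio) between group_b and group_a exposure."""
    a = weighted_exposure(areas, "group_a")
    b = weighted_exposure(areas, "group_b")
    return b - a, b / a


# Every neighborhood's pollution is cut in half.
cleaner = [{**area, "pm25": area["pm25"] * 0.5} for area in neighborhoods]

for label, areas in (("before", neighborhoods), ("after", cleaner)):
    gap, ratio = disparity(areas)
    print(f"{label}: gap = {gap:.2f} µg/m³, ratio = {ratio:.2f}")
```

Halving pollution everywhere halves the absolute gap (from about 2.67 to 1.33 µg/m³), but group_b still breathes about 1.38 times as much PM2.5 as group_a.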

In general, the challenge of correcting racial and economic injustices requires the dedicated effort of activists and political organizers, but, when it comes to air quality, it also necessitates the methodical work of scientists. Researchers need to make precise measurements of pollutants in the field, build complex computational models to assess how pollutant concentrations might change, and analyze in detail the existing law and environmental infrastructure to come up with feasible and effective policy recommendations. Each of those tasks requires its own expertise and must be performed with scientific rigor to obtain meaningful results. Still, some research groups straddle multiple realms within this broad field and solve air quality problems from the ground up. I spoke with several researchers to figure out exactly how to put the data, the law, and the computational methods together to discover how we can make the air safer to breathe for us all.

How to know if the government got it right

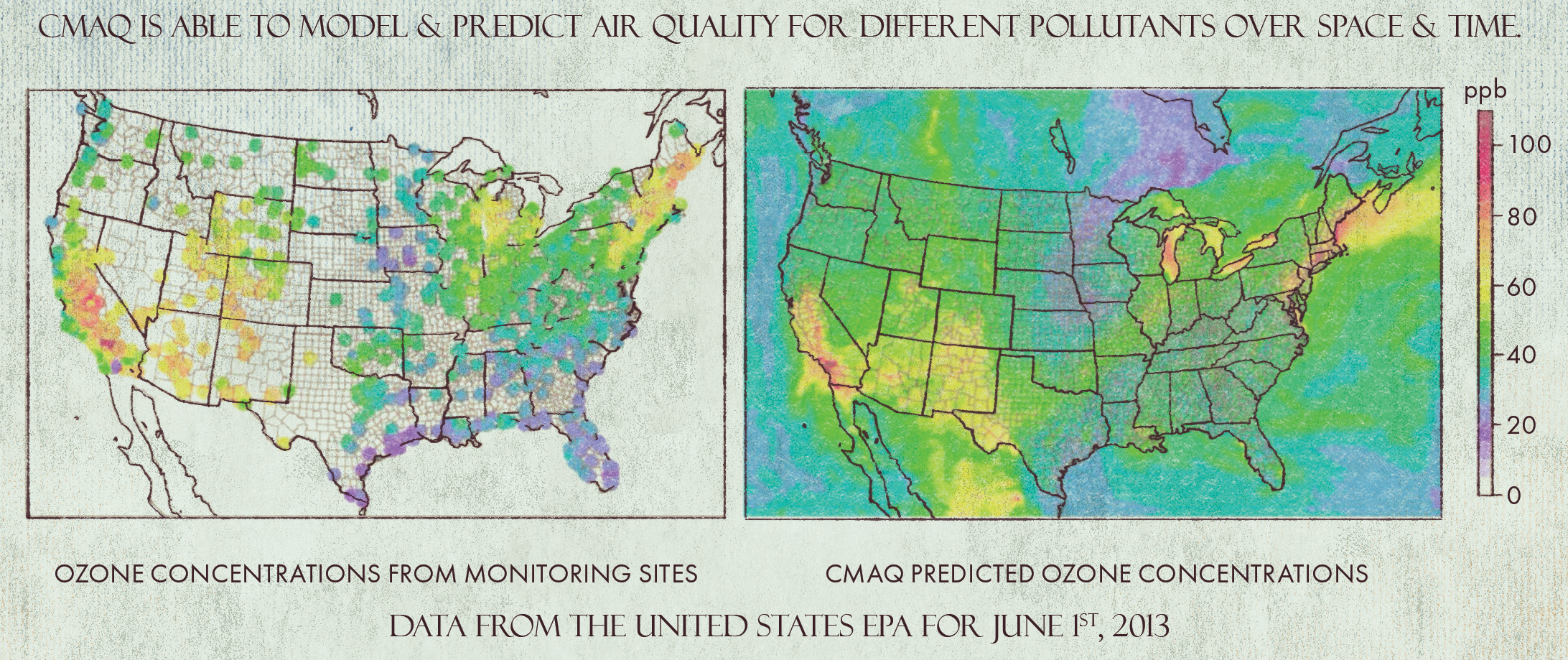

When the EPA decides to lower the maximum acceptable concentration of a pollutant, it seems natural to expect that air quality would improve. But in February 2024, when Dr. Wang heard the news about the new PM2.5 standard, she wondered whether it would have any effect at all. She decided to determine whether the EPA’s current network of monitoring sites could reliably identify every area in the United States where PM2.5 levels exceed the new threshold. She found that it could not.

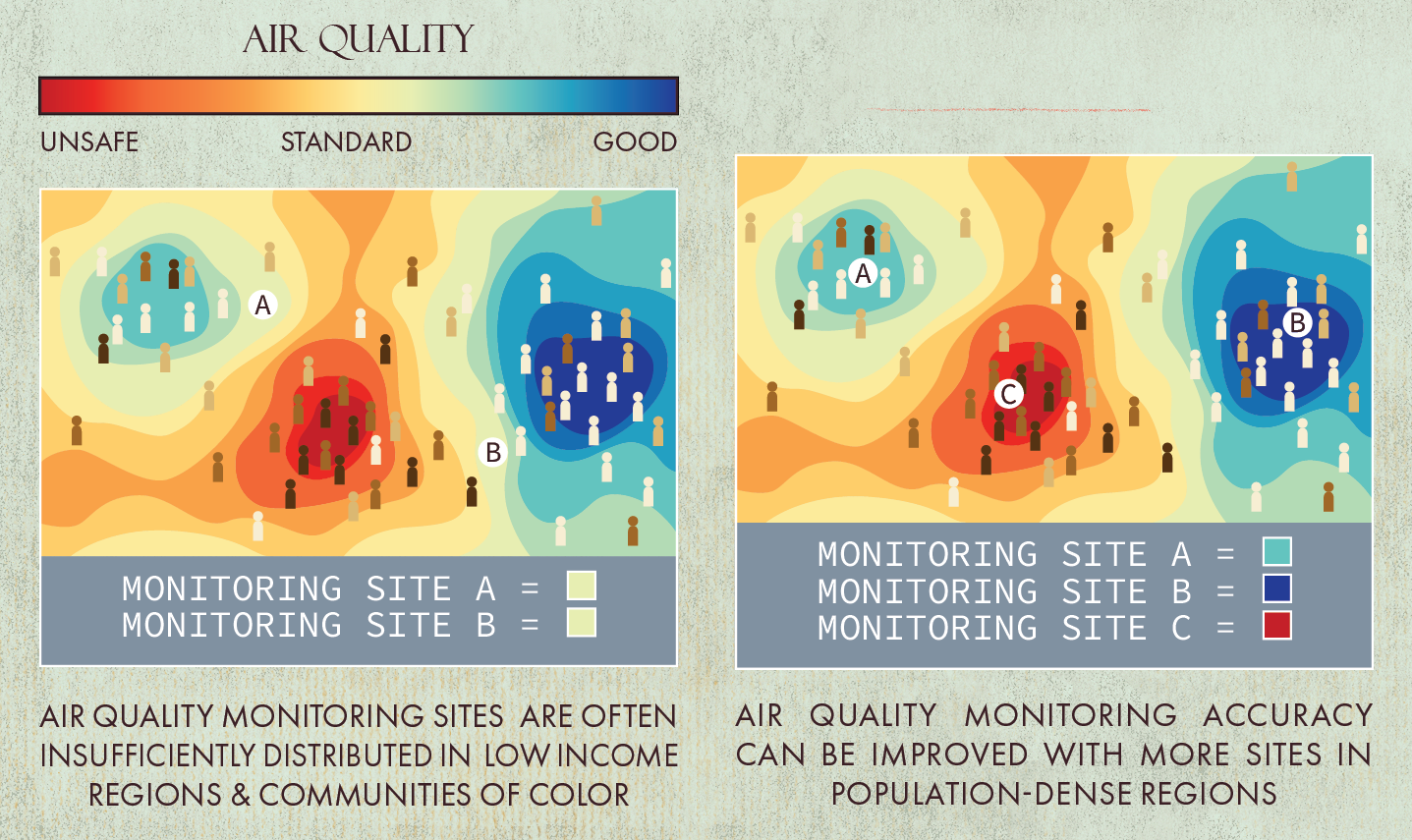

Each site in the federal monitoring network measures the concentration of a pollutant at a single point, and that measurement is used to determine whether the region surrounding the site has exceeded the standard. In October 2024, Dr. Wang published a paper with University of Washington Professor Julian Marshall and UC Berkeley Professor Joshua Apte showing that if the EPA were to implement the new standard for PM2.5 without adding any new monitoring sites, the network would likely fail to identify many of the people who live in polluted areas. The authors estimated that up to 44 percent of people living in areas that exceed the new standard would be misclassified as living in regions with safe air quality. This discrepancy comes from the inherent limitations of single-point measurements. While measurements at a single site are simple and direct, without a dense and even distribution of monitoring sites around population centers, measurements alone will miss abrupt changes in concentration that can occur under certain atmospheric conditions. Pollutants interact with each other and undergo chemical reactions as they move through the atmosphere, and they flow through the air in complex ways that, in principle, depend on the concentration of every chemical species at each location in space. For example, if you draw a line between two monitoring sites that are sufficiently far apart, the concentration of a pollutant at the midpoint of that line might be much higher than the standard even while both monitoring sites measure concentrations in the acceptable range.
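
The two-monitor example can be made concrete with a toy calculation. The sketch below invents a one-dimensional PM2.5 field along a 100-kilometer line: a background of 8 µg/m³ plus a narrow hotspot at the midpoint. Both monitors, at the ends of the line, read below 9 µg/m³, and a straight-line interpolation between them also stays below the standard, yet the air at the midpoint is far above it. The field shape, distances, and function names are all hypothetical.

```python
import math

STANDARD = 9.0  # annual PM2.5 standard, µg/m³


def true_pm25(x_km):
    """Hypothetical PM2.5 field (µg/m³): 8 µg/m³ background plus a
    Gaussian hotspot centered 50 km along the line."""
    return 8.0 + 6.0 * math.exp(-(((x_km - 50.0) / 5.0) ** 2))


# Two monitors, one at each end of the line.
monitors = [0.0, 100.0]
readings = [true_pm25(x) for x in monitors]


def interpolate(x_km):
    """Straight-line estimate between the two monitor readings."""
    (x0, x1), (y0, y1) = monitors, readings
    return y0 + (y1 - y0) * (x_km - x0) / (x1 - x0)


print("monitor readings:", [round(r, 2) for r in readings])
print("interpolated at 50 km:", round(interpolate(50.0), 2))
print("actual at 50 km:", round(true_pm25(50.0), 2))

# Kilometer marks (every 1 km) where the real air exceeds the standard.
over = [x for x in range(101) if true_pm25(x) > STANDARD]
flagged = sum(r > STANDARD for r in readings)
print(f"{len(over)} of 101 points exceed the standard; monitors flag {flagged}")
```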

To determine whether the monitoring sites are in fact too far apart, you need computational tools. Dr. Wang’s work used a computational model that makes statistical predictions based on data from satellite images. From the images, one can estimate how much pollution is present in the column of air above a location, including plumes produced by industrial sites, and, by combining these observations with additional data on traffic networks and population density, predict the concentration of PM2.5 at any location. Even using the model’s most conservative estimates for PM2.5 concentration, Dr. Wang found that substantial portions of the US population breathe air with PM2.5 levels above the new standard yet would be classified by the EPA as living under acceptable air quality conditions. Furthermore, the populations most likely to be misclassified are exactly the populations that already breathe disproportionately polluted air: low-income communities and communities of color. Thus, a policy that on its surface seems harmless could exacerbate air quality disparities. If the new standard were implemented without any additional monitoring sites, more resources would be poured into regions already covered by the monitoring network to bring them into compliance, while no additional resources would go to areas with much higher pollutant concentrations but insufficient coverage. Over the long term, one can envision a scenario in which, according to the EPA’s measurements, everyone is breathing clean, completely safe air, yet people in disadvantaged communities are still experiencing the exact same adverse health effects.
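
The misclassification estimate comes from comparing model-based concentrations with what the monitoring network reports. A simplified version of that bookkeeping is sketched below: each hypothetical grid cell has a modeled PM2.5 value, a population, and a flag for whether the monitor-based designation treats it as exceeding the standard. The misclassified share is the population in truly exceeding cells that the network does not flag, divided by the total population in exceeding cells. The cells, numbers, and the `Cell` type are invented for illustration and are not the authors’ data or code.

```python
from dataclasses import dataclass


@dataclass
class Cell:
    pm25: float      # modeled annual-mean PM2.5, µg/m³ (hypothetical)
    population: int  # residents of the cell
    flagged: bool    # does the monitor-based designation call it nonattainment?


cells = [
    Cell(10.5, 40_000, True),
    Cell(9.6, 25_000, False),   # exceeds, but no nearby monitor catches it
    Cell(9.2, 15_000, False),   # exceeds, also missed
    Cell(8.1, 60_000, False),   # truly below the standard
    Cell(11.0, 20_000, True),
]


def misclassified_share(cells, standard=9.0):
    """Fraction of people in truly exceeding cells that the network misses."""
    exceeding = [c for c in cells if c.pm25 > standard]
    total = sum(c.population for c in exceeding)
    missed = sum(c.population for c in exceeding if not c.flagged)
    return missed / total if total else 0.0


print(f"misclassified: {misclassified_share(cells):.0%}")
```

Running the same calculation separately for different income or racial groups is what reveals whether the missed population falls disproportionately on particular communities.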

Luckily, this scenario need not come true, because Dr. Wang and her colleagues not only pointed out the problem in their paper but also offered practical solutions. The researchers used the model to show that adding just 10 new monitoring sites in key areas near population centers across the United States could cut the number of people in exceeding areas that the current network misses by over 50 percent. They also suggested using mobile monitoring to identify concentration “hotspots.” These are small areas where the concentration of pollutants is much higher than in the surrounding region, and they can have serious health effects on many people when they occur in densely populated regions. Hotspots are almost impossible to detect through measurements at a single fixed site, but they can be predicted by computational models and could in principle be detected by a monitoring vehicle that makes many single-point measurements as it moves through densely populated areas. The EPA still relies only on single-point measurements made at fixed monitoring sites, but mobile monitoring, if done reliably, is a feasible way to greatly expand coverage.
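
One common way to frame the question of where to put a limited number of new monitors is as a coverage problem, and a simple greedy heuristic picks, one site at a time, the candidate that captures the most people not yet covered. The sketch below is that generic greedy approach, not necessarily the method used in the paper; the candidate sites, the cells each would cover, and the populations are hypothetical.

```python
def greedy_placement(candidates, uncaptured, k):
    """Pick up to k sites, each time choosing the one that newly covers the
    most uncaptured people.

    candidates: dict mapping site name -> set of cell ids it would cover
    uncaptured: dict mapping cell id -> people currently missed by the network
    Returns (chosen sites in order, total people newly captured).
    """
    covered = set()
    chosen = []
    for _ in range(k):
        best_site, best_gain = None, 0
        for site, site_cells in candidates.items():
            if site in chosen:
                continue
            gain = sum(uncaptured[c] for c in site_cells - covered)
            if gain > best_gain:
                best_site, best_gain = site, gain
        if best_site is None:  # no remaining site adds anyone
            break
        chosen.append(best_site)
        covered |= candidates[best_site]
    return chosen, sum(uncaptured[c] for c in covered)


uncaptured = {"c1": 50_000, "c2": 30_000, "c3": 20_000, "c4": 10_000, "c5": 5_000}
candidates = {
    "site_A": {"c1", "c2"},
    "site_B": {"c2", "c3"},
    "site_C": {"c3", "c4"},
    "site_D": {"c5"},
}

chosen, captured = greedy_placement(candidates, uncaptured, k=2)
share = captured / sum(uncaptured.values())
print(chosen, captured, f"{share:.0%}")
```

Greedy selection is not guaranteed to find the best possible set of sites, but for coverage problems of this kind it is known to capture at least about 63 percent (a fraction of 1 − 1/e) of what the optimal choice would.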

The making of a model

Dr. Wang’s research is critical to ensuring that environmental policies have their intended effect, and it relies on a deep foundation of computational, chemical, and physical science. Fundamental scientific and statistical principles and decades of computer science underlie the models that are needed to make predictions and ultimately shape policy recommendations. These technical details are complicated but crucial. You can’t make a meaningful policy recommendation without a solid scientific foundation, and it’s worthwhile to understand exactly how much hard work goes into building that foundation.

UC Berkeley Professor Cesunica Ivey’s group dives deep into the computational and scientific details of atmospheric modeling yet at the same time thinks carefully about how the data from those models can shape policy and society. In the words of Dr. Ivey, “I’m just seeking to find solutions to the most challenging problems. Whatever is necessary to find that solution, that’s what I’ll do.” It turns out that what is necessary is a whole lot of time spent in front of the computer, so when I met up with Cam Phelan, a PhD student in Dr. Ivey’s group who works on the technical details of air quality modeling, we decided to chat outside.

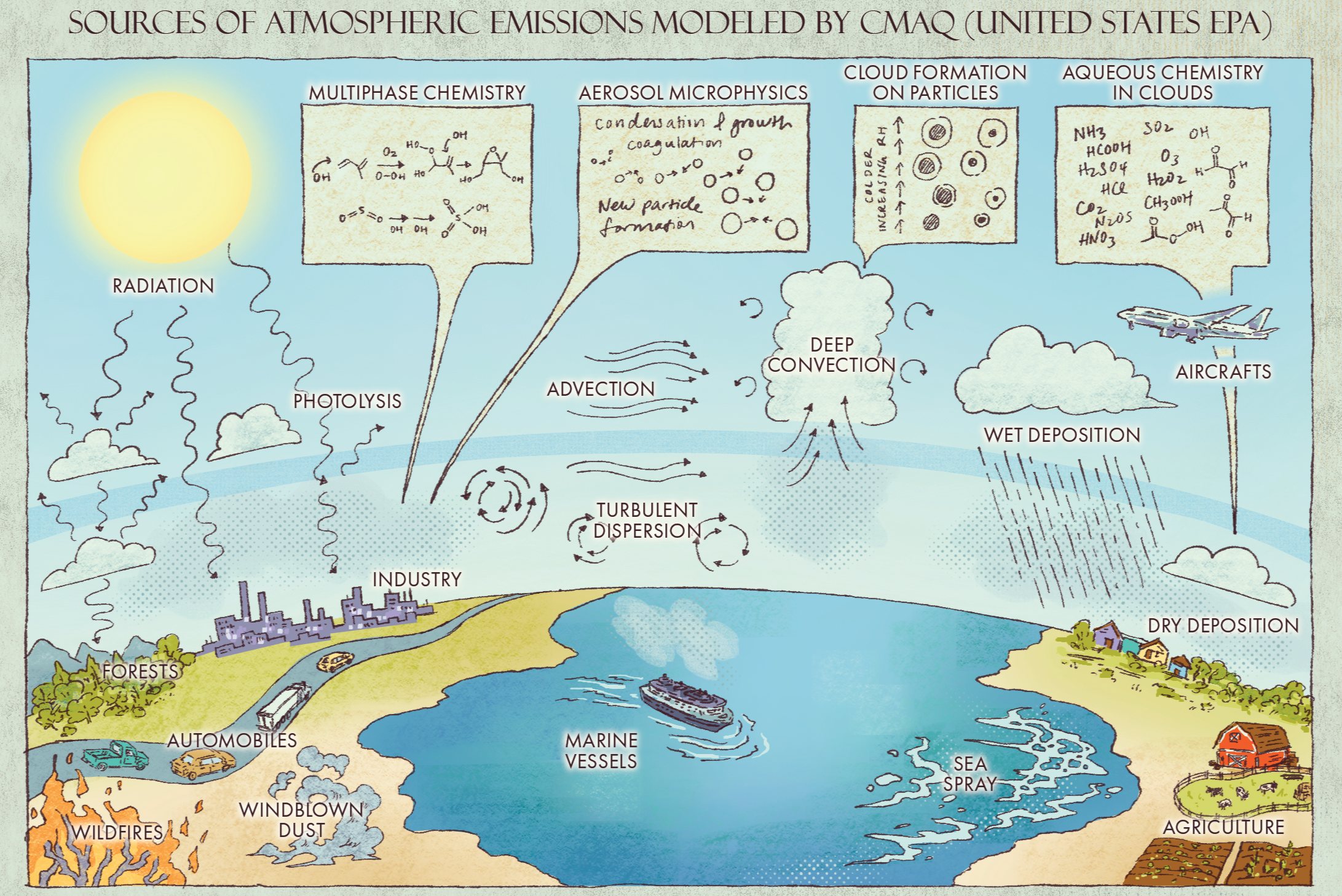

Phelan’s work focuses on optimizing and analyzing the accuracy of the Community Multiscale Air Quality Modeling System (CMAQ). It’s the workhorse of air quality modeling: a software package used by researchers in the United States and around the world to make detailed predictions of how proposed policy changes could affect the concentrations of pollutants. It was first released by the EPA in 1998 and has since been improved and fine-tuned by hundreds of researchers.

If the federal monitoring network determines that a region has exceeded the NAAQS for one or more pollutants, state and local government officials will often turn to CMAQ to see whether a proposed regulation will be enough to bring the region back below the acceptable level. Phelan told me to think of CMAQ as “a bunch of tiny chemical reactors all flowing into and out of each other.” Essentially, CMAQ divides the atmosphere into a grid of boxes. Each box contains a certain concentration of pollutants, and basic principles of physics tell us how the concentrations in each box will change over time, given a set of initial concentrations. Running a simulation like that over the enormous volumes of space that CMAQ has to consider would be difficult enough, but in addition to moving through space, pollutants also undergo chemical reactions. Therefore, CMAQ needs a model of all the reactions that could occur between any of the species it considers. It also needs to account accurately for each source of pollution on the ground: all the factories within a given box in the simulation and how much pollution they currently produce. That way, if a proposed regulation would cut the pollutant output of a set of factories by 20 percent, one can go into CMAQ, reduce those sources accordingly, and run a simulation to see what the overall effect will be.
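
Phelan’s picture of linked chemical reactors can be caricatured in a few lines. The sketch below is a deliberately tiny, hypothetical stand-in, not CMAQ: a one-dimensional row of boxes in which each time step adds emissions, removes a fixed fraction of the pollutant through a single first-order chemical loss, and lets the wind carry a fraction of each box into its downwind neighbor. Cutting the factory emissions by 20 percent and rerunning it mirrors, in miniature, the workflow described above. All parameter values and names are assumptions.

```python
def simulate(emissions, wind_fraction=0.3, loss_rate=0.05, steps=500):
    """Toy box model on a 1-D row of boxes (not CMAQ).

    emissions: amount added to each box per time step
    wind_fraction: fraction of each box carried one box downwind per step
    loss_rate: fraction removed per step by a single first-order reaction
    Returns the concentration in each box after `steps` steps.
    """
    conc = [0.0] * len(emissions)
    for _ in range(steps):
        # 1. Sources: factories and other emitters add pollutant.
        conc = [c + e for c, e in zip(conc, emissions)]
        # 2. Chemistry: a single linear loss reaction.
        conc = [c * (1.0 - loss_rate) for c in conc]
        # 3. Transport: shift a fraction of each box downwind; the last
        #    box's outflow leaves the domain.
        moved = [c * wind_fraction for c in conc]
        conc = [c - m for c, m in zip(conc, moved)]
        for i in range(1, len(conc)):
            conc[i] += moved[i - 1]
    return conc


baseline_emissions = [0.0, 2.0, 0.0, 1.0, 0.0]  # two hypothetical factories
regulated = [0.8 * e for e in baseline_emissions]  # 20 percent cut

before = simulate(baseline_emissions)
after = simulate(regulated)
for i, (b, a) in enumerate(zip(before, after)):
    print(f"box {i}: {b:.2f} -> {a:.2f}")
```

Because every process in this toy is linear, a 20 percent emission cut lowers every box by exactly 20 percent. Real atmospheric chemistry is nonlinear (ozone formation, for example, depends on the balance of nitrogen oxides and volatile organic compounds), so the response to a regulation is generally not proportional, which is a large part of why a full model like CMAQ has to be run.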

It’s incredible that such a model is possible. CMAQ accounts for chemical reactions that occur on the molecular scale and predicts effects on areas that are thousands of kilometers across. It can do this because centuries of knowledge built up from the basic study of chemistry, physics, and engineering have gone into its development. CMAQ exists because we have the requisite understanding of our physical world that allows us to know what is important and needs to be included and what can be ignored or approximated.

Considering the vastness and complexity of atmospheric processes, it’s no surprise that even with approximations and judicious choices about what to include, these models can only be run at a staggering computational expense. One might be tempted to think that computations on modern computers are essentially instantaneous. After all, the YouTube app on your phone can bring up hundreds of videos matching your search with seemingly no delay. But a single CMAQ simulation involves so many computations that, even on the largest and most advanced supercomputers in the world, it can take weeks or months to complete. Given this cost, it’s worth noting that not all models work the same way. CMAQ is what is called a first-principles model. That means it starts from an initial condition and follows the equations of motion given by the laws of physics and chemistry. This is fundamentally different from the type of model that Dr. Wang used in her work on PM2.5, which is called an empirical or “data-driven” model. This type of model relies on two huge sets of related data: for instance, a set of satellite images and a set of pollutant measurements taken near the area shown in each image. The model then finds patterns in the data, correlating general features of the images with the measured outcomes. If one then provides the model with satellite images of a place where no measurement was taken, the model can use the correlations it has learned to make a probabilistic guess about what a measurement there would show. This is essentially machine learning, and it’s not so different from the way that ChatGPT comes up with the next word it will say in the answer it’s writing to you.
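
A data-driven model, in its simplest possible form, is a fitted relationship between an input that can be observed everywhere and a measurement available only at some places. The sketch below fits an ordinary least-squares line relating a hypothetical satellite-derived signal to ground-level PM2.5 at five invented monitor locations, then uses it to estimate PM2.5 at a location without a monitor. Real empirical models use many more inputs and more flexible machine-learning methods; the data, the single-predictor linear form, and the function names here are assumptions for illustration.

```python
import math

# Hypothetical training data: a satellite-derived signal (unitless) at five
# monitor locations, paired with PM2.5 measured on the ground (µg/m³).
satellite = [0.1, 0.2, 0.3, 0.4, 0.5]
measured = [4.0, 6.1, 7.9, 10.2, 11.8]


def fit_line(xs, ys):
    """Ordinary least squares for y = intercept + slope * x."""
    n = len(xs)
    x_bar, y_bar = sum(xs) / n, sum(ys) / n
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    slope = sxy / sxx
    return y_bar - slope * x_bar, slope


intercept, slope = fit_line(satellite, measured)

# Spread of the residuals: a rough sense of how uncertain a prediction is.
residuals = [y - (intercept + slope * x) for x, y in zip(satellite, measured)]
spread = math.sqrt(sum(r * r for r in residuals) / (len(residuals) - 2))

# Predict PM2.5 where there is satellite coverage but no monitor.
new_signal = 0.35
estimate = intercept + slope * new_signal
print(f"estimated PM2.5: {estimate:.1f} ± {spread:.1f} µg/m³")
```

The residual spread is only a rough gauge, not a formal prediction interval, but it captures the idea that a data-driven model returns a best guess together with some uncertainty.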

An empirical model can be very efficient and accurate at predicting the average concentration of one pollutant in a region over the course of a few years. But if you want more detailed information than that, you can’t use empirical models. You need to turn to first principles. That’s why, even as data-driven models improve and can be used more often, it is still important to develop models like CMAQ to balance accuracy and speed in an optimal way. And that optimization takes a lot of effort and time. It’s key to carefully control any approximation one makes and rigorously test every aspect of the model to ensure that the result is reliable. For instance, Phelan’s most recent work compared four common approximations for modeling chemical reactions to see which gave the most accurate result under specific meteorological conditions. To have a model that can be used reliably by the entire community of air quality researchers, dozens—if not hundreds—of studies like this need to be performed. They’re technical, even a bit tedious, but the final product of all those studies combined is what allows us to know what’s in the air we breathe.
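
The specific chemical approximations Phelan compared are not described here. The general pattern of such a study, though, is to run competing approximations on a problem whose answer is known and measure how far each one drifts. The sketch below applies that pattern to a different, simpler kind of approximation: two textbook time-stepping methods (explicit and implicit Euler) for a single reaction that removes a pollutant at a rate proportional to its concentration, checked against the exact exponential solution. The rate constant, step sizes, and function names are hypothetical.

```python
import math

C0 = 10.0     # initial concentration (arbitrary units)
K = 0.5       # first-order rate constant, per hour (hypothetical)
T_END = 10.0  # simulated time, hours


def explicit_euler(dt, steps):
    """Forward Euler for dc/dt = -K c."""
    c = C0
    for _ in range(steps):
        c = c - K * c * dt
    return c


def implicit_euler(dt, steps):
    """Backward Euler for dc/dt = -K c; solves c_new = c - K c_new dt."""
    c = C0
    for _ in range(steps):
        c = c / (1.0 + K * dt)
    return c


exact = C0 * math.exp(-K * T_END)

errors = {}
for dt in (5.0, 1.0, 0.1):
    steps = round(T_END / dt)
    errors[dt] = (abs(explicit_euler(dt, steps) - exact),
                  abs(implicit_euler(dt, steps) - exact))
    print(f"dt = {dt:>4}: explicit error {errors[dt][0]:.4f}, "
          f"implicit error {errors[dt][1]:.4f}")
```

With large steps the explicit method produces nonsense (here, a concentration that grows instead of decaying), while the implicit method stays stable but is still inaccurate; only at small steps do both agree closely with the exact answer. Trade-offs between stability, accuracy, and cost are exactly what benchmarking studies of this kind are designed to expose.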

When and why science should be political

Many research groups throughout the United States work on the same types of technical analyses that Phelan performs, but Dr. Ivey’s group is rare in that it also maintains a focus on applying the products of technical research directly to policy. In 2023, Dr. Ivey and Dr. Wang co-authored a paper published in Science that looked at the impact of President Biden’s Justice40 initiative on air quality. Justice40 was a program established in January 2021 as part of a climate-focused executive order. It set the goal that 40 percent of the overall benefits of certain federal investments, including programs for reducing pollution, flow to disadvantaged communities. The Science article showed through modeling that Justice40 would narrow some disparities in air quality between high- and low-income groups but would not affect the large racial disparities in air quality. Based on this result, the authors recommended that race be explicitly included as a factor in determining which communities count as “disadvantaged,” rather than relying only on socioeconomic data.

However, any suggestion to improve the Justice40 initiative was rendered moot when President Trump, on his inauguration day, rescinded the executive order that established the program. As of mid-March, 388 employees had already been fired at the EPA. President Trump has announced his intention to cut 65 percent of the EPA’s total budget, and the EPA plans to shut down its scientific research arm, which could mean laying off as many as 1,155 research scientists. Meanwhile, billion-dollar cuts to the National Institutes of Health are being fought in the courts, and hundreds more employees have been laid off at the National Science Foundation. If the full cuts were to take effect, they would severely hinder the work of air quality modelers as well as scientists across the country.

Nevertheless, Dr. Ivey is resolute that her day-to-day work will not change, stating simply that the inherent value of air quality modeling remains no matter who is in charge. She does, however, express some concern that most researchers in her field are content only to pay lip service to political problems. She tells me, “People are saying ‘justice’ and ‘equity’ a lot more, but the ratio of folks doing work like this to the people that are ‘sticking to the science’ is really small.” Dr. Ivey seems to see this as a missed opportunity, although she does not minimize the need for methodical research in all the branches of science integral to her field.

“Some of us need to be working on policy-oriented work,” she says, “and some of us need to be working on microphysical processes and chemical mechanisms, because all of that eventually ends up in an air quality model.”

But the main point she emphasizes to me is that the work she and her colleagues perform, and the tools they build to do it, are to her the best way to pursue the fundamental goal of clean air for all people. She hopes that articles like the one published in Science with Dr. Wang, even if they can now have no tangible impact on the Justice40 initiative, can motivate scientists to move out of their comfort zone more often and focus on the political implications of their work. After some prodding to push her out of a naturally humble stance, she wrapped up our interview with the vision that work like hers could be a “new paradigm.”

It is a paradigm that surely becomes more difficult to implement as President Trump targets the very notion of equity and environmental justice. In addition to rescinding the executive order that enacted the Justice40 program, Trump signed, on his inauguration day, a new executive order entitled “Ending Radical and Wasteful Government DEI Programs and Preferencing.” The order calls to “terminate, to the maximum extent allowed by law, all DEI, DEIA, and ‘environmental justice’ offices and positions.” On March 14, EPA Administrator Lee Zeldin announced that the agency had abolished its environmental justice and diversity, equity and inclusion branches. In a post on X (formerly Twitter), Zeldin stated that environmental justice “sounds great in theory” but that in practice “environmental justice has been used as an excuse to fund left-wing activist groups instead of actually spending those dollars on directly remediating the specific environmental issues that need to be addressed.” In the same week, the EPA announced what it labeled “the largest deregulatory action in U.S. history.” The EPA’s press release stated that it would reconsider dozens of long-standing environmental regulations, among them the NAAQS for PM2.5, which it claims “shut down opportunities for American manufacturing and small businesses.” The EPA also announced its intention to reconsider the 2009 endangerment finding, the legal determination that greenhouse gases threaten the public health and welfare of current and future generations, which provides the legal basis for the EPA to regulate greenhouse gas emissions.

The EPA under the Trump administration intends to roll back regulations that were designed to keep the air safe to breathe. At the same time, the Trump administration describes the idea of environmental justice as “radical” and “wasteful.” Researchers like Dr. Ivey, though, would describe environmental justice as the natural result of careful and methodical science, dedicated to the goal of clean air for all people. Dr. Ivey concluded our conversation by addressing how others might label her work, saying simply:

“People think that when you are seeking to address issues that have political implications, the science suffers. But it doesn’t have to. It doesn’t have to if you maintain intellectual rigor.”

This article is part of the Spring 2025 issue.