Machine learning: Chapter 3

By Jonathan Liu

November 25, 2018

On July 4, 2012, scientists at the Large Hadron Collider (LHC) near Geneva, Switzerland, announced that they had discovered the Higgs boson, a fundamental particle that is believed to play a key role in giving mass to the matter in our universe. Scientists had been chasing this particle for over 40 years, and observing the birth and subsequent decay of the Higgs boson required one of the most sensitive experiments in the history of humankind. Now, scientists must fully characterize the elusive nature of the Higgs boson in order to test new theories of physics and push the field further. However, characterizing the particle is a formidable challenge that requires sifting through enormous amounts of complex data. LHC scientists are confronting this challenge by using machine learning to train computers to look for potential Higgs bosons.

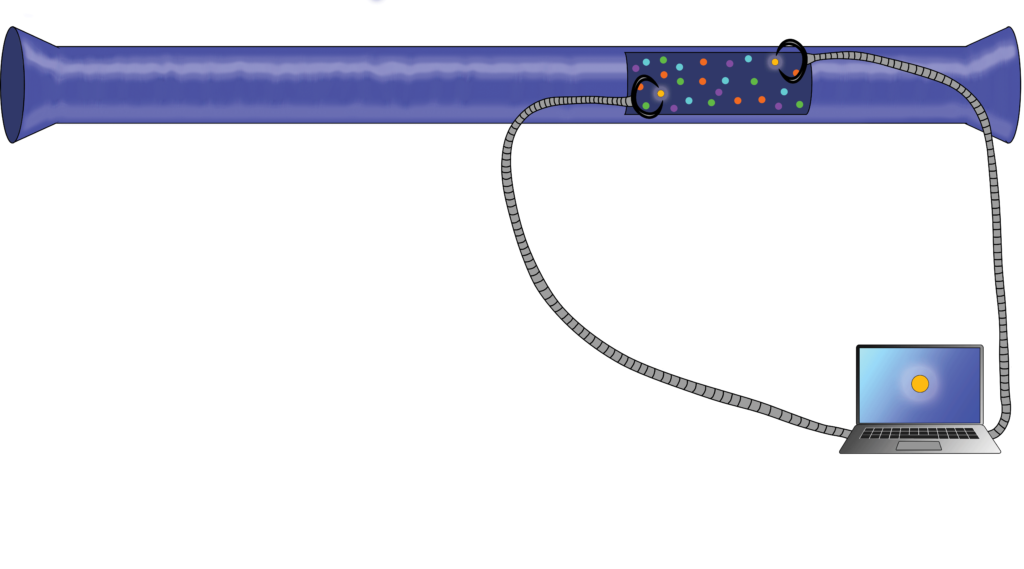

The LHC produces Higgs bosons by colliding protons together at nearly the speed of light. A Higgs boson cannot be detected directly, however, because it decays too rapidly. Instead, physicists must infer its brief existence by examining the energies of the particles produced by its decay. Within the massive shower of particles that follows a proton collision, only a few particles might actually carry evidence of a Higgs boson decay (the chance of producing a Higgs boson is only one in a trillion), so scientists must carefully comb through all of the particle data, which is often obscured by strong background signals from other particles generated by the collision.

While both humans and computers can accurately identify Higgs bosons, computers have the advantage of being able to efficiently work through the large volumes of data generated by the LHC.

The trained human eye can look at an individual collision event and pick out likely Higgs boson decays, but the LHC produces over 30 petabytes of data a year, far more than a single person could process in an entire lifetime. At that scale, studying the Higgs boson by hand would be nearly impossible, which is why LHC scientists use machine learning algorithms to identify potential Higgs boson candidates in their datasets.

Machine learning combines human-like accuracy with computer-like processing speed, so more of the LHC data can be mined for precious signals with less waste. “We’ve gained between 50 to 100 percent efficiency with these new technologies,” says Jennet Dickinson, a fourth-year physics graduate student who works with Professor Marjorie Shapiro at UC Berkeley. Dickinson is part of ATLAS, one of the experimental collaborations at the LHC. Along with other Berkeley scientists, she develops more efficient machine learning techniques for sifting through LHC data.

Before scientists at the LHC turned to machine learning, they relied on cruder filtering methods to sort through the large volumes of data. Those methods threw away potentially useful material, however, because the data can be ambiguous: many signals look more like “maybe a Higgs boson” than “definitely a Higgs boson.” Because accidentally studying a particle that is not a Higgs boson could contaminate the analysis, these filtering methods discarded much of the ambiguous data, including events that may contain signatures of real Higgs bosons, to retain only the strongest candidates. Higgs bosons are already rare, so discarding these events leaves too few for the precise characterization needed to test new theories of particle physics. The contrast shows how machine learning has changed particle physics: if the earlier methods were akin to searching for gold by keeping only the brightest objects, machine-learning-based methods are more like sifting through grains of dirt for individual flecks of gold.

Machine learning has continued to prove useful at the LHC since the initial detection of the Higgs boson in 2012. Just this past year, LHC scientists observed the Higgs boson being produced together with a pair of top quarks, another type of fundamental particle. This rare production mechanism differs from the ones behind the original 2012 discovery and had never been observed before, primarily because of limitations in analysis techniques. Machine learning has allowed LHC scientists to make full use of their precious data and turn their attention to new challenges in particle physics. Equipped with these sophisticated techniques, particle physicists are optimistic about pushing physics to new frontiers in the years to come.

Jonathan Liu is a graduate student in physics.

This article is part of the Fall 2018 issue.